There is a LOT of experimentation required when it comes to marketing your business. You really need to get to know your audience and see what they respond to best. Luckily for you, A/B split testing allows you to do just that!

A/B testing (a.k.a. split testing) is a simple experiment that lets you compare user behavior across two similar alternatives, A and B. In digital marketing, the alternatives can be different text, images, layouts, etc. Ideally, you get statistically significant results (i.e. results that are unlikely to be down to chance). Those results tell you which alternative to stick with in the future.

Why A/B Split Testing?

While A/B testing can be applied to many types of experiments, it is most common in digital marketing. Big names like Google and Amazon use this simple technique! Here are some reasons why:

A/B Split Testing is based on real numbers

A/B Testing allows you to quit making assumptions. Real data is used along with cold, hard math in order to come up with statistically significant results. You can simply look at which option people chose more, but that doesn’t paint the full picture. It could be just a fluke! The more advanced math involves applying statistical significance tests to make sure the results were not a coincidence. The numbers don’t lie!

It works for everyone, no matter how big or small

Businesses of all sizes use this method, whether they are a small business with a thousand monthly page views or a multi-billion dollar company like Google. Fun fact: it has been said that Google performs over 7000 A/B tests on its platforms in a year!

Google may have millions of users in its data sample, and I’m assuming that won’t be the case for you. You can still get a decent indication of what users prefer with samples as small as several hundred users. Of course, when performing the test, the more the merrier.

It is cheap to run and easy to set up

There are many free resources to perform A/B split testing for small businesses. Most notably, Google Analytics has free A/B split testing tools for websites of up to a certain size.

It is not that difficult to set up either, as many of the platforms used for testing are very user-friendly. You don’t need to be a programming guru to implement this into your marketing strategy. The hardest part is probably picking the two alternatives!

A/B testing can be used on many marketing techniques

Here is a long yet incomplete list of items you can perform a simple A/B test on, just so you get the idea. Afterwards, we’ll look at email marketing in particular since it is very straightforward. A/B split testing can be used to improve:

- Email marketing

- Landing pages

- Lead magnets

- Website layout (headers, text, images, etc.)

- Data entry forms

- Facebook advertisements

- E-commerce / transaction process

- And more!

Example: Using A/B Split Testing for Email Marketing

Let’s take a look at email marketing since it is easy, relatable, and effective. A survey of Mailchimp users found that A/B testing improved e-commerce revenues by up to 20% with marginal effort!

For simplicity’s sake, let’s say your small e-commerce business has a mailing list with 2000 subscribers. You use your mailing list to inform customers of the promotions you’re offering. You want to see how you can improve your conversion rate through email and decide to use the A/B testing feature on ActiveCampaign. This will allow you to find which subject line makes users more likely to open the email. The two subject lines are:

- Option A: Save 20% at [company name] for a limited time only

- Option B: Hey [first name], get 20% off until July 15!

The body of the emails is the same for both alternatives; only the subject line changes. Option A is what is currently used, and option B is what we will try out to see if it works better. You have a hunch that option B may work better, since personalized email subject lines are opened up to 26% more often. Remember: don’t just assume, use A/B testing to confirm!

Performing the A/B test

It is time to perform the test. Half of the subscribers (1000) receive option A, and the other 1000 receive option B. It is important that all other variables (e.g. email delivery time, email body content) remain the same so that any difference in results can be attributed to the subject line.
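If you ever need to do the split by hand rather than letting your email platform handle it, the key is to assign subscribers to the two groups at random. Here is a minimal Python sketch of that idea. The function name `split_subscribers` and the placeholder addresses are made up for illustration, and this is not how ActiveCampaign does it internally.

```python
import random


def split_subscribers(subscribers, seed=42):
    """Shuffle a copy of the list and cut it in half: (group_a, group_b)."""
    shuffled = list(subscribers)           # copy, so the original order is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical mailing list of 2000 placeholder addresses
subscribers = [f"user{i}@example.com" for i in range(2000)]

group_a, group_b = split_subscribers(subscribers)
print(len(group_a), len(group_b))
```

Shuffling before cutting the list matters: splitting by sign-up date or alphabetically could put different kinds of subscribers in each group and skew the results.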

After a set period of time, say one day, the data collection is done and it’s time to interpret the results.

Analyzing the results

So, how did the two options do?

- Option A: 12.0% open rate (120 users), 1.1% click-through rate (11 users)

- Option B: 15.5% open rate (155 users), 2.0% click-through rate (20 users)

Remember: open rate is the percent of people who open the email up, and click-through rate is the percent of people who click the link in the email.

Just by looking at the numbers, it appears that option B had better results. You could draw a quick conclusion and say that option B earned you 35 more opens and 9 more click-throughs. The real question, however, remains to be answered: are the results statistically significant?

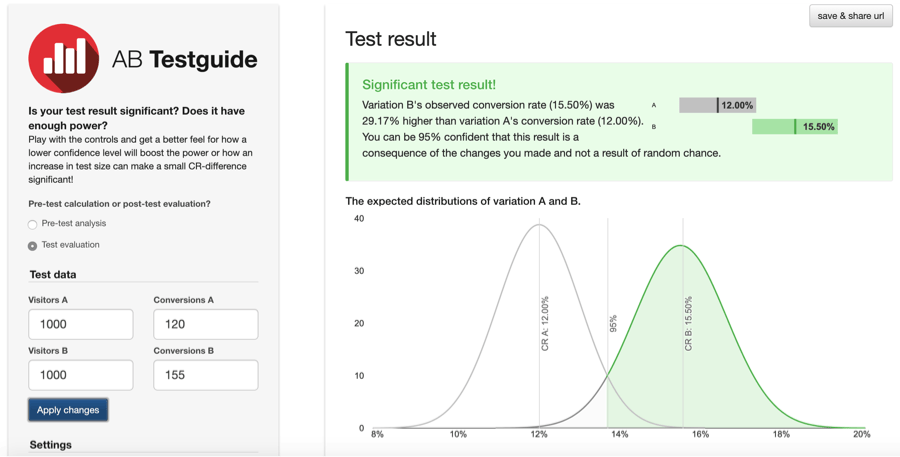

You’ll need to do some statistical analysis to answer that. Thankfully, there is an awesome site that you can use regardless of your knowledge of stats! We will use the AB Testguide calculator to see if our results are more than just luck.

I inputted the data for open rates on the left. As you can see, the results are significant at a 95% confidence level! For those not familiar, this is effectively saying “we are 95% sure.” You can change the confidence level in the settings between 90%, 95%, and 99%, but I recommend keeping it at 95% for the time being.

The conclusion is this: option B improved the email open rate by 29% relative to option A (15.5% versus 12.0%). This was determined at a confidence level of 95%. Now, we know email opens were improved (as we’d hoped), but what about click-throughs?

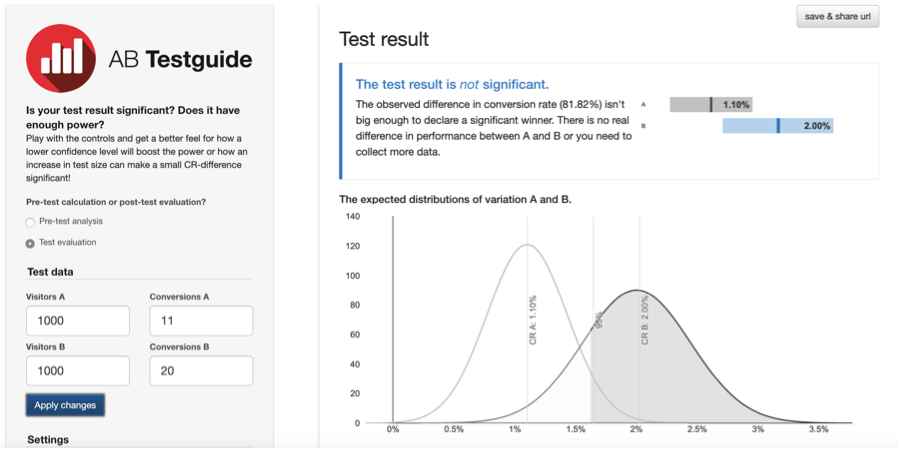

Sadly, the results were not significant here. This means that the change wasn’t strong enough to prove that option B has higher click-through rates than option A. It could’ve been a coincidence!
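If you are curious what a calculator like this does under the hood, both checks can be approximated with a two-proportion z-test. The Python sketch below is my own illustration using the numbers from this example. It assumes a two-sided test with a pooled standard error, which may not be exactly the method the AB Testguide calculator uses, and the function name `two_proportion_z_test` is made up.

```python
from math import erfc, sqrt


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled rate: what we would expect if A and B performed the same
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Numbers from the example: 1000 recipients per option
z_open, p_open = two_proportion_z_test(120, 1000, 155, 1000)
z_click, p_click = two_proportion_z_test(11, 1000, 20, 1000)

# Relative improvement in open rate of option B over option A
uplift = (155 / 1000 - 120 / 1000) / (120 / 1000)

print(f"Opens:  z = {z_open:.2f}, p = {p_open:.3f}, uplift = {uplift:.0%}")
print(f"Clicks: z = {z_click:.2f}, p = {p_click:.3f}")
```

A p-value below 0.05 corresponds to significance at the 95% level. Under these assumptions the open-rate difference clears that bar and the click-through difference does not, which matches what the calculator reported.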

After doing this experiment, you can still conclude that option B leads to higher open rates and should be your new default email subject line.

Conclusion and Next Steps

These are the very basics of A/B testing, otherwise known as split testing. You can apply this to many areas of digital marketing such as landing pages, Facebook ads, and more. Each application has different variables and parameters to work with, and each deserves a detailed discussion of its own.

Once you master A/B split testing, you can even try more advanced experiments. One example is multivariate testing. This is like A/B split testing, except that you change several elements at once and compare more than two combinations!